Medallion Architecture

As a Founder and Data Warehouse Architect at DataKrypton.ai, I’ve seen countless startups make the same costly mistake: running analytics directly on the transactional databases where their products live. It seems convenient at first, with no extra infrastructure and no data pipelines to maintain, but the hidden costs quickly outweigh the upfront savings.

The Hidden Dangers of Analytics on Transactional Systems

• Performance Issues

Analytics workloads tend to be read-heavy and resource-intensive. Long-running SQL queries for reports or dashboards can consume CPU, memory, and I/O bandwidth, slowing down the very operations that keep your product responsive.

• Data Risks

Mixing OLTP and OLAP workloads increases the chance of lock contention and, depending on the isolation level, of reports that see inconsistent data when long joins run against tables that are still being written. Without a clear separation, it’s also hard to enforce data governance and lineage.

• Scalability Problems

As your user base grows, so do your data volumes and analytics demands. Transactional systems scale differently than data warehouses—and hitting those limits can stall feature development and marketing analytics alike.
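One way to picture the separation is an incremental copy from the transactional store into a separate analytics store, so that reports never query live tables. The sketch below is only an illustration under stated assumptions: two in-memory SQLite databases stand in for the OLTP system and the warehouse, the `orders` table and the `extract_new_orders` helper are hypothetical, and the id-based watermark assumes rows are append-only with increasing ids.

```python
import sqlite3

def extract_new_orders(oltp, warehouse):
    """Copy orders the warehouse has not seen yet, using the highest loaded id as a watermark."""
    (watermark,) = warehouse.execute("SELECT COALESCE(MAX(id), 0) FROM orders").fetchone()
    rows = oltp.execute(
        "SELECT id, customer, amount FROM orders WHERE id > ? ORDER BY id",
        (watermark,),
    ).fetchall()
    warehouse.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    warehouse.commit()
    return len(rows)

# Hypothetical stand-ins for the production database and the warehouse.
oltp = sqlite3.connect(":memory:")
warehouse = sqlite3.connect(":memory:")
for db in (oltp, warehouse):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")

oltp.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "acme", 120.0), (2, "globex", 80.0), (3, "acme", 40.0)],
)
oltp.commit()

copied = extract_new_orders(oltp, warehouse)

# The heavy aggregate runs against the warehouse copy, not the live table.
revenue = warehouse.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
```

The only query that touches the transactional side is a short, indexed range read; everything expensive happens on the copy.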

Why You Should Invest in a Dedicated Data Warehouse

Switching to a purpose-built data warehouse isn’t just an expense—it’s an investment that pays off in three key ways:

1. Labor Savings

A warehouse lets you automate ingestion, cleansing, and transformation. Engineers spend less time debugging slow queries or fighting locks and more time delivering high-value insights, which can add up to tens or even hundreds of thousands in avoided operational costs.

2. Operational Efficiency

Data warehouses are optimized for complex analytics. Features like columnar storage, adaptive caching, and materialized views let you run reports in seconds without touching production databases.

3. New Insights

With a structured analytics environment, you can implement advanced capabilities—machine learning, real-time streaming metrics, or cross-source joins—that would be impractical on OLTP systems.

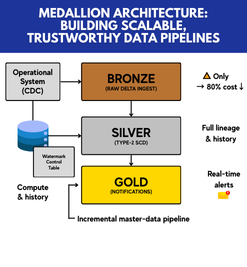

Embracing Medallion Architecture for Reliable Data Journeys

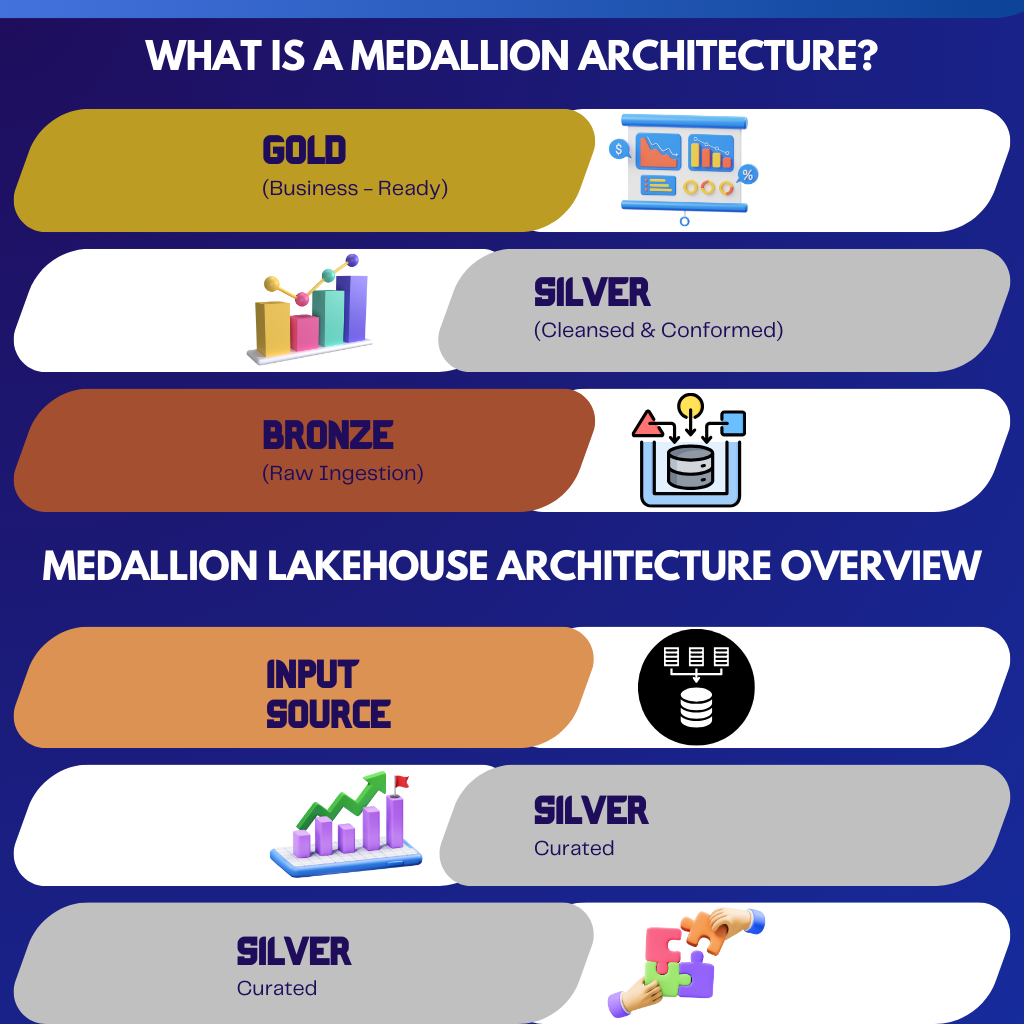

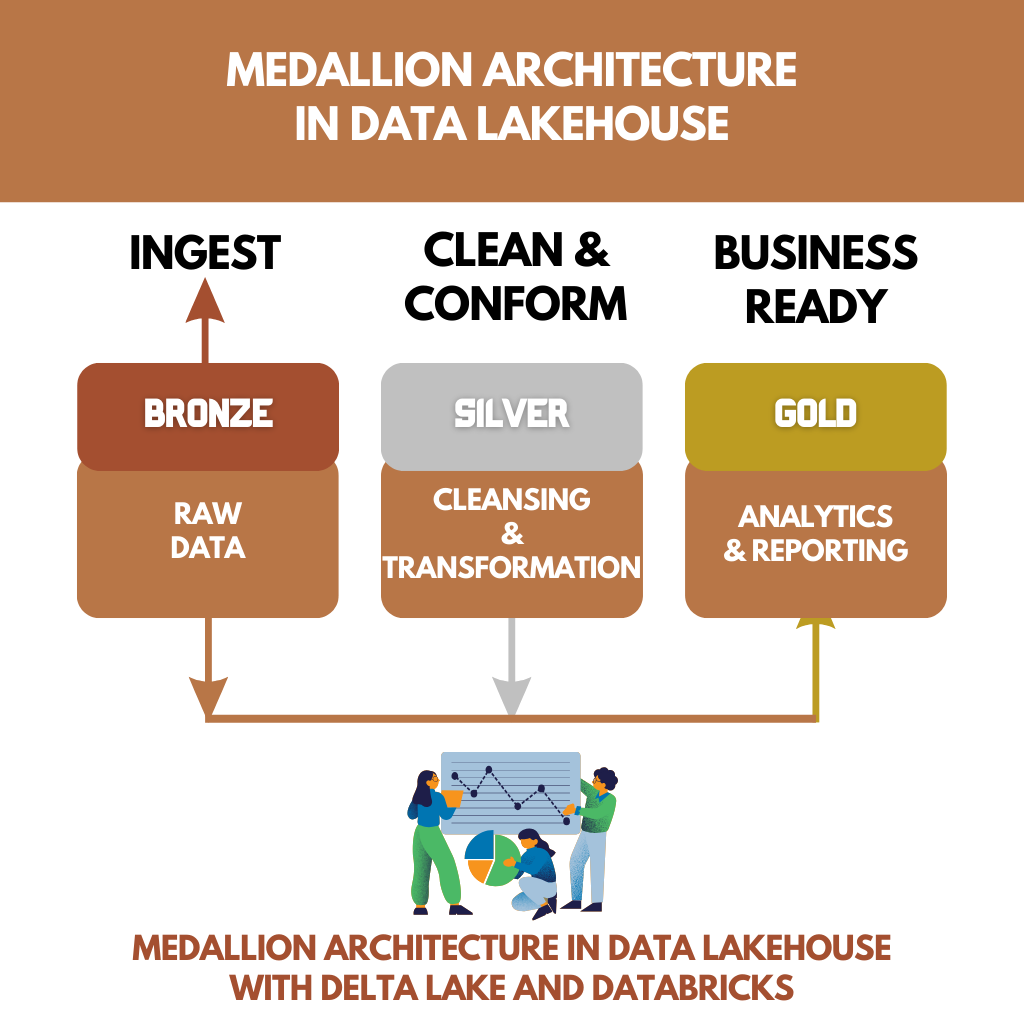

A modern best practice in cloud data engineering is the Medallion Architecture, which organizes your data warehouse into three layers, each refining the one before it, so that data quality and traceability improve at every stage:

• Bronze (Raw Ingestion)

Ingest raw data as-is from source systems—transaction logs, event streams, third-party APIs—without transformations.

• Silver (Cleansed & Conformed)

Apply schema enforcement, deduplication, and enrichment. Link together related datasets (e.g., customers to orders) to create reliable, query-ready tables.

• Gold (Business-Ready)

Build aggregates, ML features, and domain-specific tables (e.g., sales by region per week) for consumption by BI tools and data scientists.
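The three layers can be sketched in a few lines of plain Python. This is a toy illustration, not a reference implementation: the sample records, the column names, and the `to_silver` and `to_gold` helpers are all hypothetical, and a real warehouse would express the same steps as tables and SQL or Spark jobs.

```python
from collections import defaultdict
from datetime import date

# Bronze: raw records kept exactly as received (hypothetical sample data).
bronze_orders = [
    {"order_id": "1", "region": "EU ", "amount": "100.50", "order_date": "2024-01-01"},
    {"order_id": "1", "region": "EU ", "amount": "100.50", "order_date": "2024-01-01"},  # duplicate
    {"order_id": "2", "region": "us", "amount": "75", "order_date": "2024-01-03"},
    {"order_id": "3", "region": "US", "amount": "n/a", "order_date": "2024-01-09"},  # bad amount
]

def to_silver(raw_rows):
    """Silver: enforce types, normalize values, drop duplicates and invalid rows."""
    seen, silver = set(), []
    for row in raw_rows:
        try:
            clean = {
                "order_id": int(row["order_id"]),
                "region": row["region"].strip().upper(),
                "amount": float(row["amount"]),
                "order_date": date.fromisoformat(row["order_date"]),
            }
        except (KeyError, ValueError):
            continue  # a real pipeline would quarantine rejected rows instead
        if clean["order_id"] in seen:
            continue
        seen.add(clean["order_id"])
        silver.append(clean)
    return silver

def to_gold(silver_rows):
    """Gold: total sales keyed by (region, ISO year, ISO week)."""
    totals = defaultdict(float)
    for row in silver_rows:
        iso = row["order_date"].isocalendar()
        totals[(row["region"], iso[0], iso[1])] += row["amount"]
    return dict(totals)

silver_orders = to_silver(bronze_orders)
gold_sales = to_gold(silver_orders)
```

Because the bronze list is never modified, the silver and gold results can be rebuilt from it at any time if the cleansing rules change.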

Why Modular Beats Monolithic Every Time

Traditional monolithic data platforms bundle ingestion, transformation, storage, and serving into one codebase or appliance. In contrast, a modular data architecture breaks work into independent, reusable components:

• Decoupling

You can upgrade one module—say, your streaming ingestion—without impacting batch transformations or BI dashboards.

• Agility

Small, focused teams own individual pipelines or schemas, speeding development and reducing coordination overhead.

• Maintainability

Isolated modules mean smaller codebases, clearer interfaces, and easier testing. You can version-control each stage (bronze, silver, gold) independently.

• Cost Optimization

Spin up compute only for the workloads you need; retire modules that become obsolete without massive refactoring.
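To make the modular idea concrete, here is a minimal sketch in which every stage is an independent function that shares one small interface (rows in, rows out). The stage names, the `Rows` and `Stage` aliases, and the sample data are assumptions made for illustration only.

```python
from typing import Callable, Dict, List

Rows = List[Dict[str, str]]
Stage = Callable[[Rows], Rows]  # the only contract between modules

def normalize_email(rows: Rows) -> Rows:
    """Trim and lowercase the email column, returning new rows."""
    return [{**r, "email": r["email"].strip().lower()} for r in rows]

def dedupe_by_email(rows: Rows) -> Rows:
    """Keep the first row seen for each email address."""
    seen, out = set(), []
    for r in rows:
        if r["email"] not in seen:
            seen.add(r["email"])
            out.append(r)
    return out

def run_pipeline(rows: Rows, stages: List[Stage]) -> Rows:
    """Apply each stage in order; stages know nothing about each other."""
    for stage in stages:
        rows = stage(rows)
    return rows

raw = [
    {"email": " Ana@Example.com "},
    {"email": "ana@example.com"},
    {"email": "bo@example.com"},
]
customers = run_pipeline(raw, [normalize_email, dedupe_by_email])
```

Replacing or retiring a stage means editing the list passed to `run_pipeline`; the other stages and their tests stay untouched.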

Getting Started with a Modular Medallion Approach

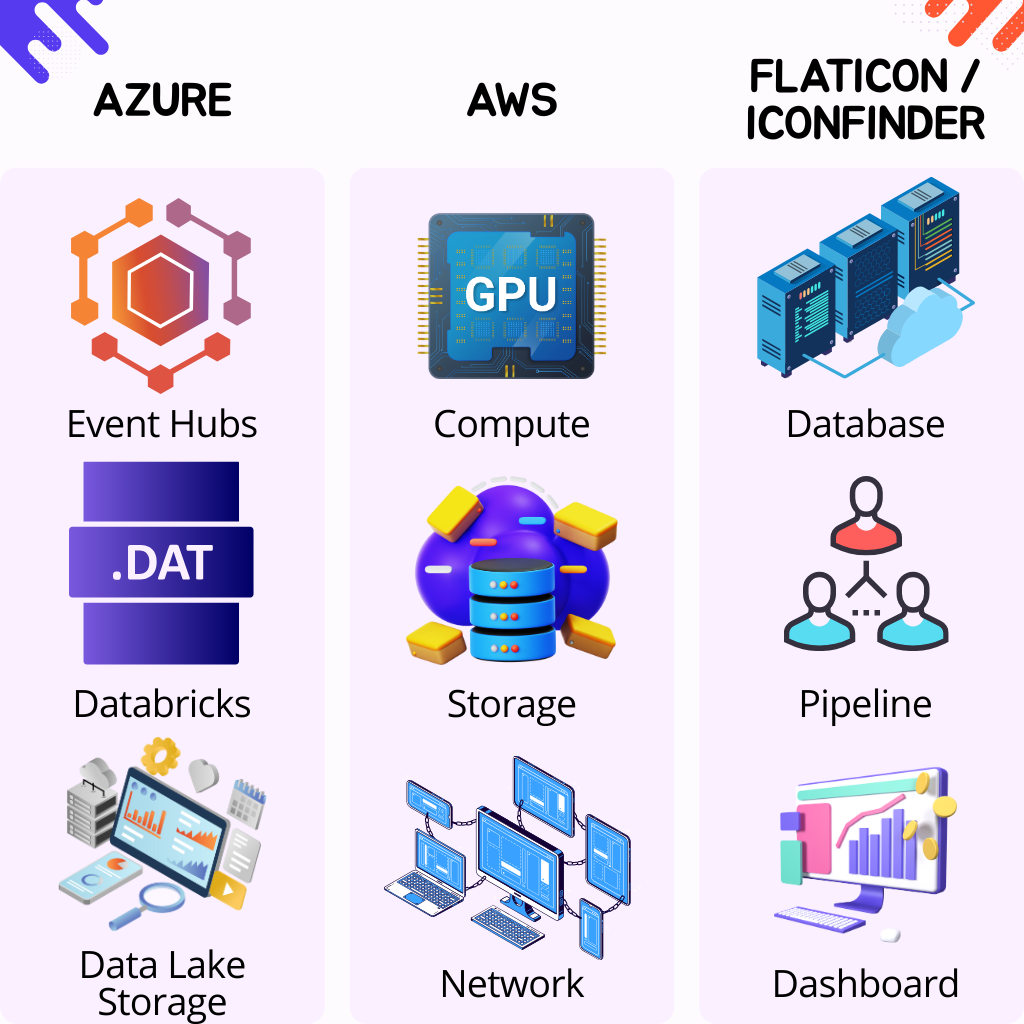

1. Choose Your Cloud Stack

Platforms like Databricks, Snowflake, or BigQuery can all host layered data lakes and warehouses, with each layer kept in its own schema or dataset.

2. Define Your Bronze, Silver, Gold Schemas

Treat each layer as its own database or schema. Version your table definitions in Git.

3. Build Reusable Pipelines

Leverage orchestration tools (e.g., Airflow, Azure Data Factory) to parameterize ingestion, transformation, and load tasks.

4. Monitor and Govern

Implement automated tests, data quality checks, and observability dashboards to catch issues early.
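As a rough sketch of the data quality checks in step 4, the function below takes its rules as parameters so the same check can be reused across tables and layers. The `check_table` helper, the column names, and the sample rows are hypothetical; dedicated data quality tools offer far richer checks than this.

```python
def check_table(rows, required, unique_key):
    """Return a list of human-readable failures; an empty list means the table passed."""
    failures = []
    for col in required:
        missing = sum(1 for r in rows if r.get(col) in (None, ""))
        if missing:
            failures.append(f"{col}: {missing} missing value(s)")
    keys = [r.get(unique_key) for r in rows]
    if len(keys) != len(set(keys)):
        failures.append(f"{unique_key}: duplicate values")
    return failures

# Hypothetical silver-layer sample with one empty email and one repeated id.
silver_customers = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 2, "email": "bo@example.com"},
]

failures = check_table(
    silver_customers,
    required=["customer_id", "email"],
    unique_key="customer_id",
)
```

An orchestrated task could run a check like this after each load and stop the pipeline when the returned list is not empty, so bad data does not reach the gold layer.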

Don’t let short-term convenience become a long-term liability. By adopting a dedicated data warehouse underpinned by a modular Medallion Architecture, you’ll unlock faster analytics, safer data practices, and scalable growth—giving your startup the solid foundation it needs to thrive. At DataKrypton.ai, we help you design and implement these best practices so you can focus on what you do best: building great products.