Accelerating Master Data Updates with Incremental Loads & Real-Time Alerts in Medallion Architecture

Accelerating Master Data Updates with Incremental Loads & Real-Time Alerts in Medallion Architecture

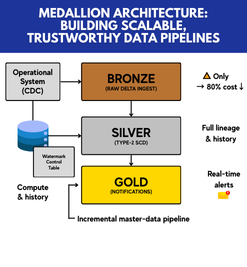

Keeping your master data fresh and your teams informed is crucial for enterprise analytics. By combining Delta Lake’s CDC features with a layered Medallion Architecture, you can achieve high-performance ingestion, robust history tracking, and automated notifications—all without reprocessing terabytes of data.

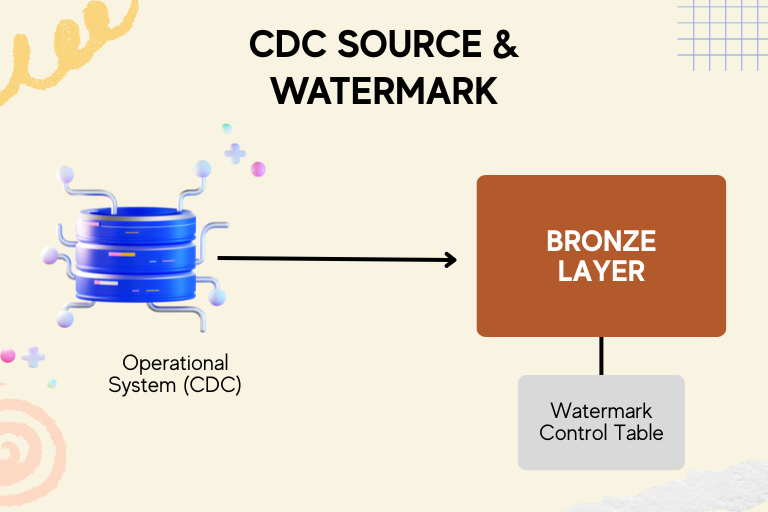

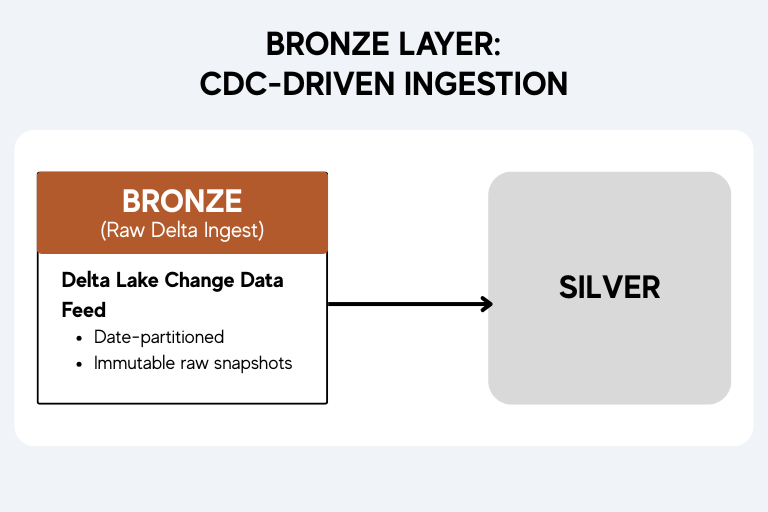

1. Bronze Layer: CDC-Driven Ingestion

- Delta Change Data Feed: Leverage Delta Lake’s built-in CDC to capture inserts, updates, and deletes from your operational system.

- Watermark Control Table: Maintain a control table that stores the maximum last_updated timestamp processed. On each run, query only records newer than this watermark.

- Partitioned Landing Zone: Write raw deltas into a date-partitioned Bronze table for immutable auditability.

Key SQL Snippet

MERGE INTO bronze.master_raw t

USING (

SELECT * FROM source_system.cdc_feed

WHERE last_updated > (SELECT last_ts FROM control_master)

) s

ON t.id = s.id

WHEN NOT MATCHED THEN INSERT *

WHEN MATCHED AND t.hash <> s.hash THEN INSERT *; — history capture

2. Silver Layer: Type-2 SCD & History

- Delta MERGE for SCD-2: Use Spark’s optimized Delta merges to upsert only the changed records into a Type-2 SCD table. This preserves full change history while marking the current version as active.

- Efficient Processing: Since Bronze only contains deltas, the Silver job runs in minutes, not hours, dramatically reducing compute costs.

Example

PySpark

bronze_delta = spark.table(“bronze.master_raw”)

silver = spark.table(“silver.master_scd”)

bronze_delta.alias(“src”) \

.merge(

silver.alias(“tgt”),

“src.id = tgt.id AND tgt.active = true”

) \

.whenMatchedUpdate(

set={“active”: F.lit(False), “effective_end”: F.current_date()}

) \

.whenNotMatchedInsertAll() \

.execute()

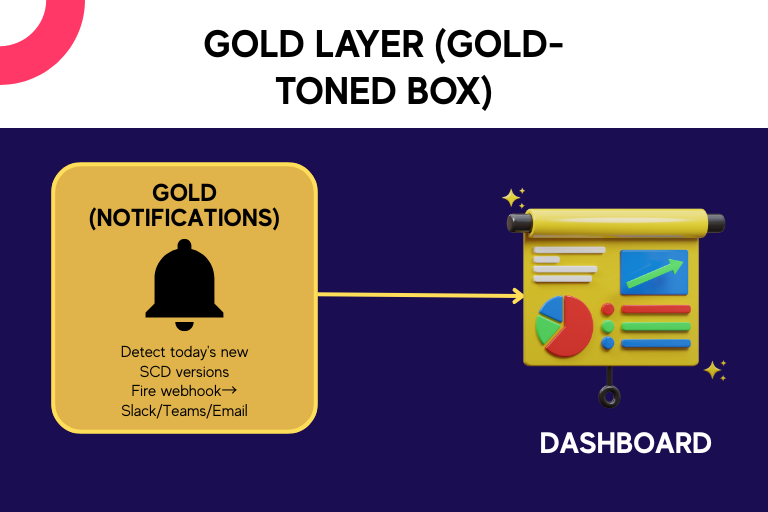

3. Gold Layer: Event-Driven Notifications

- Change Detection Job: After the Silver merge, run a lightweight Spark or Python job that filters for records with today’s effective_start.

- Webhook Integration: Dispatch JSON-formatted payloads via Slack, Teams, or email using a Databricks notebook webhook or a serverless function (Azure Function/AWS Lambda).

- Digest Report: Include counts, sample records, and links to BI dashboards so business users can act immediately.

Pseudo-Code

updates = spark.table(“silver.master_scd”) \

.filter(“effective_start = current_date()”)

if updates.count() > 0:

payload = {

“text”: f”{updates.count()} master records updated”,

“samples”: updates.limit(5).toJSON().collect()

}

requests.post(WEBHOOK_URL, json=payload)

Benefits & Best Practices

- Lean Compute: Process only deltas—cutting costs by up to 80%.

- Auditability: Bronze raw logs + Silver SCD-2 ensure a bulletproof lineage.

- Proactive Alerts: Business teams stay informed in real time, improving data trust and adoption.